pgflow 0.7.0: Public Beta with Map Steps and Documentation Redesign

pgflow 0.7.0 is here - a major milestone that brings parallel array processing, production-ready stability, and a complete documentation redesign.

pgflow Enters Public Beta

pgflow has transitioned from alpha to public beta. Core functionality is stable and reliable, with early adopters already running pgflow in production environments.

This milestone reflects months of testing, bug fixes, and real-world usage feedback. The SQL Core, DSL, and Edge Worker components have proven robust across different workloads and deployment scenarios.

See the project status page for production recommendations and known limitations.

Map Steps

Map steps enable parallel array processing by automatically creating multiple tasks - one for each array element.

    import { Flow } from '@pgflow/dsl/supabase';

    const BatchProcessor = new Flow<string[]>({
      slug: 'batch_processor',
      maxAttempts: 3,
    })
      .map(
        { slug: 'processUrls' },
        async (url) => {
          // Each URL gets its own task with independent retry
          return await scrapeWebpage(url);
        }
      );

Why this matters:

When processing 100 URLs, if URL #47 fails, only that specific task retries - the other 99 continue processing. With a regular step, one failure would retry all 100 URLs.

This independent retry isolation makes flows more efficient and resilient. Each task has its own retry counter, timeout, and execution context.

Map steps handle edge cases automatically:

- Empty arrays complete immediately without creating tasks

- Type violations fail gracefully with stored output for debugging

- Results maintain array order regardless of completion sequence
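The retry isolation and edge-case behavior described above can be modeled in a few lines of plain TypeScript. This is a conceptual sketch only: pgflow schedules map tasks through its own workers, not an in-process loop, and `runMapStep`, `fakeScrape`, and the example URLs are hypothetical names invented for illustration.

```typescript
// Conceptual model only; not pgflow's implementation.
type Handler<In, Out> = (item: In) => Promise<Out>;

// Run one task per array element; each task keeps its own attempt counter.
async function runMapStep<In, Out>(
  items: readonly In[],
  handler: Handler<In, Out>,
  maxAttempts: number,
): Promise<Out[]> {
  // Empty input: nothing to schedule, so the step completes immediately.
  if (items.length === 0) return [];

  const runTask = async (item: In): Promise<Out> => {
    let lastError: unknown;
    for (let attempt = 1; attempt <= maxAttempts; attempt++) {
      try {
        return await handler(item);
      } catch (error) {
        lastError = error; // only this task retries; sibling tasks are unaffected
      }
    }
    throw lastError;
  };

  // Promise.all yields results in input order, whatever the completion order.
  return Promise.all(items.map((item) => runTask(item)));
}

// Usage: 100 fake URLs, where URL #47 fails on its first attempt only.
const urls = Array.from({ length: 100 }, (_, i) => `https://example.com/${i + 1}`);
const calls = new Map<string, number>();

const fakeScrape: Handler<string, string> = async (url) => {
  const attempt = (calls.get(url) ?? 0) + 1;
  calls.set(url, attempt);
  if (url.endsWith('/47') && attempt === 1) throw new Error('transient failure');
  return `scraped ${url}`;
};
```

Calling `runMapStep(urls, fakeScrape, 3)` in this model makes 101 handler calls in total: 99 tasks succeed on the first attempt and URL #47 takes two. Retrying the whole batch as a single step could take up to 200.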

Learn more: Map Steps and Process Arrays in Parallel

Array Steps

The new .array() method provides compile-time type safety for array-returning handlers:

    // Enforces array return type at compile time
    flow.array({ slug: 'items' }, () => [1, 2, 3]); // Valid

    flow.array({ slug: 'invalid' }, () => 42); // Compile error

Array steps are a semantic wrapper that makes intent clear and moves type errors from .map() to .array(). When a map step depends on a regular step that doesn’t return an array, the compiler catches it too - .array() just makes the error location more precise and the code intention explicit.
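To see how a method can reject non-array handlers at compile time, here is a minimal standalone sketch using a generic constraint. It assumes nothing about pgflow's real type definitions: `arrayStep` and `StepOptions` are hypothetical stand-ins for illustration.

```typescript
// Hypothetical stand-in for an .array()-style method; not pgflow's actual types.
type StepOptions = { slug: string };

// The constraint `T extends readonly unknown[]` forces the handler to return an array.
function arrayStep<T extends readonly unknown[]>(
  options: StepOptions,
  handler: () => T,
): { slug: string; output: T } {
  return { slug: options.slug, output: handler() };
}

const items = arrayStep({ slug: 'items' }, () => [1, 2, 3]); // OK: output is number[]

// @ts-expect-error a handler returning 42 does not satisfy the array constraint
const invalid = arrayStep({ slug: 'invalid' }, () => 42);
```

The `@ts-expect-error` comment marks the line the type checker rejects. The error is reported at the step definition itself, which is the "more precise error location" the paragraph above describes.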

TypeScript Client - Now Fully Documented

The @pgflow/client package, initially released in v0.4.0 but never widely announced, now has complete documentation. This type-safe client powers the pgflow demo and provides both promise-based and event-based APIs for starting workflows and monitoring real-time progress from TypeScript environments (browsers, Node.js, Deno, React Native).

Features include type-safe flow management with automatic inference from flow definitions, real-time progress monitoring via Supabase broadcasts, and extensive test coverage.

Learn more: TypeScript Client Guide | @pgflow/client API Reference

Documentation Restructure and New Landing Page

The entire documentation has been reorganized from a feature-based structure to a user-journey-based structure, making it easier to find what you need at each stage of using pgflow.

New documentation includes:

- Build section - Guides for starting flows, organizing code, processing arrays, and managing versions

- Deploy section - Production deployment guides for Supabase

- Concepts - Understanding map steps, context object, data model, and architecture

- @pgflow/client API Reference - Complete client library documentation

Redesigned Landing Page

The homepage has been completely rebuilt with animated DAG visualization, interactive before/after code comparisons, and streamlined messaging. Visit pgflow.dev to explore the new experience.

Developer Experience Improvements

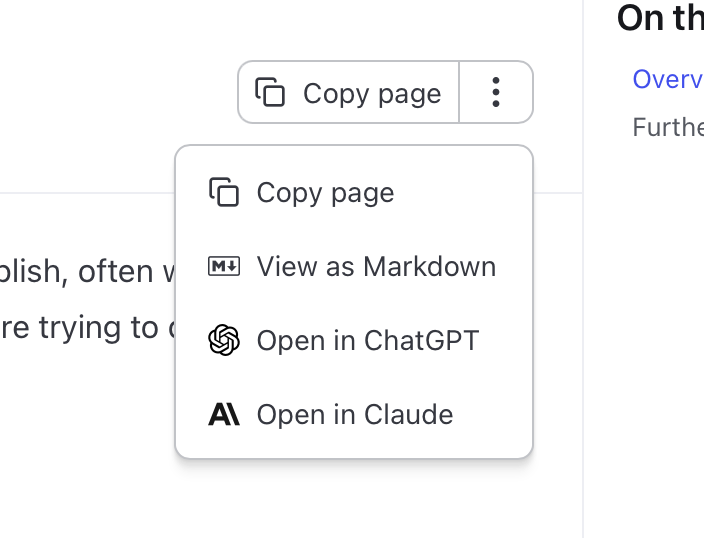

Copy to Markdown on All Docs Pages - Every documentation page now includes contextual menu buttons to copy the page as markdown or open it directly in Claude Code or ChatGPT for context-aware assistance.

Additional improvements:

- Full deno.json Support - pgflow compile now uses the --config flag for complete deno.json support

- Fixed config.toml Corruption - CLI no longer corrupts minimal config.toml files (thanks to @DecimalTurn)

- Better Type Inference - Improved DSL type inference for .array() and .map() methods

- Compile-time Duplicate Slug Detection - Prevents duplicate step slugs before deployment
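One way a builder can catch duplicate slugs during type checking is to accumulate the slugs already used in a type parameter. The sketch below illustrates that general technique. Whether pgflow implements its check this way is not stated here, and `MiniFlow` is a hypothetical class invented for illustration.

```typescript
// Hypothetical mini-builder; pgflow's actual DSL types may differ.
class MiniFlow<Slugs extends string = never> {
  private readonly slugs: string[] = [];

  // If Slug is already in Slugs, the parameter type becomes `never`,
  // so no argument type-checks and the duplicate is rejected.
  step<Slug extends string>(
    slug: Slug extends Slugs ? never : Slug,
  ): MiniFlow<Slugs | Slug> {
    const next = new MiniFlow<Slugs | Slug>();
    next.slugs.push(...this.slugs, slug);
    return next;
  }

  list(): readonly string[] {
    return this.slugs;
  }
}

const flow = new MiniFlow().step('fetch').step('parse'); // OK: two distinct slugs

// @ts-expect-error 'fetch' is already registered on this flow
flow.step('fetch');
```

The check exists only in the type system: the builder returns a new instance on every call, so `flow.list()` still contains just `fetch` and `parse` after the rejected line.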

Reliability Improvements

- Enhanced Failure Handling - Automatic archival of queued messages when runs fail, with stored output for debugging

- Fixed Data Pruning Bug - Resolved foreign key constraint issue preventing cleanup operations

- Comprehensive integration tests for map steps and enhanced type testing infrastructure

Upgrading to 0.7.0

See the update guide for complete instructions.

Questions or issues with the upgrade? Join our Discord community or open an issue on GitHub.